Research

Recent research publications

2024

LLMeBench: A Flexible Framework for Accelerating LLMs Benchmarking

The recent development and success of Large Language Models (LLMs) necessitate an evaluation of their performance across diverse NLP tasks in different languages. Although several frameworks have been developed and made publicly available, their customization capabilities for specific tasks and datasets are often complex for different users. In this study, we introduce the LLMeBench framework, which can be seamlessly customized to evaluate LLMs for any NLP task, regardless of language. The framework features generic dataset loaders, several model providers, and pre-implements most standard evaluation metrics. It supports in-context learning with zero- and few-shot settings. A specific dataset and task can be evaluated for a given LLM in less than 20 lines of code while allowing full flexibility to extend the framework for custom datasets, models, or tasks. The framework has been tested on 31 unique NLP tasks using 53 publicly available datasets within 90 experimental setups, involving approximately 296K data points. We open-sourced LLMeBench for the community (https://github.com/qcri/LLMeBench/) and a video demonstrating the framework is available online (https://youtu.be/9cC2m_abk3A).

@inproceedings{dalvi-etal-2024-llmebench,

title = "{LLM}e{B}ench: A Flexible Framework for Accelerating {LLM}s Benchmarking",

author = "Dalvi, Fahim and

Hasanain, Maram and

Boughorbel, Sabri and

Mousi, Basel and

Abdaljalil, Samir and

Nazar, Nizi and

Abdelali, Ahmed and

Chowdhury, Shammur Absar and

Mubarak, Hamdy and

Ali, Ahmed and

Hawasly, Majd and

Durrani, Nadir and

Alam, Firoj",

editor = "Aletras, Nikolaos and

De Clercq, Orphee",

booktitle = "Proceedings of the 18th Conference of the European Chapter of the Association for Computational Linguistics: System Demonstrations",

month = mar,

year = "2024",

address = "St. Julians, Malta",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2024.eacl-demo.23",

pages = "214--222",

abstract = "The recent development and success of Large Language Models (LLMs) necessitate an evaluation of their performance across diverse NLP tasks in different languages. Although several frameworks have been developed and made publicly available, their customization capabilities for specific tasks and datasets are often complex for different users. In this study, we introduce the LLMeBench framework, which can be seamlessly customized to evaluate LLMs for any NLP task, regardless of language. The framework features generic dataset loaders, several model providers, and pre-implements most standard evaluation metrics. It supports in-context learning with zero- and few-shot settings. A specific dataset and task can be evaluated for a given LLM in less than 20 lines of code while allowing full flexibility to extend the framework for custom datasets, models, or tasks. The framework has been tested on 31 unique NLP tasks using 53 publicly available datasets within 90 experimental setups, involving approximately 296K data points. We open-sourced LLMeBench for the community (https://github.com/qcri/LLMeBench/) and a video demonstrating the framework is available online (https://youtu.be/9cC2m{\_}abk3A).",

}

Scaling up Discovery of Latent Concepts in Deep NLP Models

Despite the revolution caused by deep NLP models, they remain black boxes, necessitating research to understand their decision-making processes. A recent work by Dalvi et al. (2022) carried out representation analysis through the lens of clustering latent spaces within pre-trained models (PLMs), but that approach is limited to small scale due to the high cost of running agglomerative hierarchical clustering. This paper studies clustering algorithms in order to scale the discovery of encoded concepts in PLM representations to larger datasets and models. We propose metrics for assessing the quality of discovered latent concepts and use them to compare the studied clustering algorithms. We found that K-Means-based concept discovery significantly enhances efficiency while maintaining the quality of the obtained concepts. Furthermore, we demonstrate the practicality of this newfound efficiency by scaling latent concept discovery to LLMs and phrasal concepts.
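As a rough illustration of K-Means-based concept discovery, the sketch below clusters a handful of toy token vectors with plain Lloyd's algorithm. The tokens, the two-dimensional vectors, and the helper names are hypothetical stand-ins for real contextualized representations; this is not the authors' implementation.

```python
import random


def kmeans(points, k, iters=20, seed=0):
    """Plain Lloyd's algorithm over a list of equal-length float tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # pick k distinct points as initial centroids
    assignment = [0] * len(points)
    for _ in range(iters):
        # Assignment step: attach each point to its nearest centroid.
        for i, p in enumerate(points):
            assignment[i] = min(
                range(k),
                key=lambda c: sum((a - b) ** 2 for a, b in zip(p, centroids[c])),
            )
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return assignment


def group_tokens(tokens, assignment):
    """Collect tokens by cluster id; each cluster is one candidate 'concept'."""
    clusters = {}
    for tok, a in zip(tokens, assignment):
        clusters.setdefault(a, []).append(tok)
    return sorted(sorted(members) for members in clusters.values())


# Hypothetical toy data: in practice each vector would be a token representation
# extracted from a pre-trained model.
tokens = ["Paris", "Berlin", "Doha", "red", "green", "blue"]
vectors = [(0.9, 0.1), (1.0, 0.0), (0.8, 0.2), (0.1, 0.9), (0.0, 1.0), (0.2, 0.8)]

concepts = group_tokens(tokens, kmeans(vectors, k=2))
print(concepts)
```

Each K-Means iteration costs time linear in the number of points, which is the property that makes this family of algorithms attractive at larger scales than hierarchical clustering.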

@inproceedings{hawasly-etal-2024-scaling,

title = "Scaling up Discovery of Latent Concepts in Deep {NLP} Models",

author = "Hawasly, Majd and

Dalvi, Fahim and

Durrani, Nadir",

editor = "Graham, Yvette and

Purver, Matthew",

booktitle = "Proceedings of the 18th Conference of the European Chapter of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = mar,

year = "2024",

address = "St. Julian{'}s, Malta",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2024.eacl-long.48",

pages = "793--806",

abstract = "Despite the revolution caused by deep NLP models, they remain black boxes, necessitating research to understand their decision-making processes. A recent work by Dalvi et al. (2022) carried out representation analysis through the lens of clustering latent spaces within pre-trained models (PLMs), but that approach is limited to small scale due to the high cost of running Agglomerative hierarchical clustering. This paper studies clustering algorithms in order to scale the discovery of encoded concepts in PLM representations to larger datasets and models. We propose metrics for assessing the quality of discovered latent concepts and use them to compare the studied clustering algorithms. We found that K-Means-based concept discovery significantly enhances efficiency while maintaining the quality of the obtained concepts. Furthermore, we demonstrate the practicality of this newfound efficiency by scaling latent concept discovery to LLMs and phrasal concepts.",

}

LAraBench: Benchmarking Arabic AI with Large Language Models

Recent advancements in Large Language Models (LLMs) have significantly influenced the landscape of language and speech research. Despite this progress, these models lack specific benchmarking against state-of-the-art (SOTA) models tailored to particular languages and tasks. LAraBench addresses this gap for Arabic Natural Language Processing (NLP) and Speech Processing tasks, including sequence tagging and content classification across different domains. We utilized models such as GPT-3.5-turbo, GPT-4, BLOOMZ, Jais-13b-chat, Whisper, and USM, employing zero- and few-shot learning techniques to tackle 33 distinct tasks across 61 publicly available datasets. This involved 98 experimental setups, encompassing ~296K data points, ~46 hours of speech, and 30 sentences for Text-to-Speech (TTS). This effort resulted in 330+ sets of experiments. Our analysis focused on measuring the performance gap between SOTA models and LLMs. The overarching trend observed was that SOTA models generally outperformed LLMs in zero-shot learning, with a few exceptions. Notably, larger computational models with few-shot learning techniques managed to reduce these performance gaps. Our findings provide valuable insights into the applicability of LLMs for Arabic NLP and speech processing tasks.

@inproceedings{abdelali-etal-2024-larabench,

title = "{LA}ra{B}ench: Benchmarking {A}rabic {AI} with Large Language Models",

author = "Abdelali, Ahmed and

Mubarak, Hamdy and

Chowdhury, Shammur and

Hasanain, Maram and

Mousi, Basel and

Boughorbel, Sabri and

Abdaljalil, Samir and

El Kheir, Yassine and

Izham, Daniel and

Dalvi, Fahim and

Hawasly, Majd and

Nazar, Nizi and

Elshahawy, Youssef and

Ali, Ahmed and

Durrani, Nadir and

Milic-Frayling, Natasa and

Alam, Firoj",

editor = "Graham, Yvette and

Purver, Matthew",

booktitle = "Proceedings of the 18th Conference of the European Chapter of the Association for Computational Linguistics (Volume 1: Long Papers)",

month = mar,

year = "2024",

address = "St. Julian{'}s, Malta",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2024.eacl-long.30",

pages = "487--520",

abstract = "Recent advancements in Large Language Models (LLMs) have significantly influenced the landscape of language and speech research. Despite this progress, these models lack specific benchmarking against state-of-the-art (SOTA) models tailored to particular languages and tasks. LAraBench addresses this gap for Arabic Natural Language Processing (NLP) and Speech Processing tasks, including sequence tagging and content classification across different domains. We utilized models such as GPT-3.5-turbo, GPT-4, BLOOMZ, Jais-13b-chat, Whisper, and USM, employing zero and few-shot learning techniques to tackle 33 distinct tasks across 61 publicly available datasets. This involved 98 experimental setups, encompassing {\textasciitilde}296K data points, {\textasciitilde}46 hours of speech, and 30 sentences for Text-to-Speech (TTS). This effort resulted in 330+ sets of experiments. Our analysis focused on measuring the performance gap between SOTA models and LLMs. The overarching trend observed was that SOTA models generally outperformed LLMs in zero-shot learning, with a few exceptions. Notably, larger computational models with few-shot learning techniques managed to reduce these performance gaps. Our findings provide valuable insights into the applicability of LLMs for Arabic NLP and speech processing tasks.",

}

2023

Evaluating Neuron Interpretation Methods of NLP Models

Neuron interpretation offers valuable insights into how knowledge is structured within a deep neural network model. While a number of neuron interpretation methods have been proposed in the literature, the field lacks a comprehensive comparison among these methods. This gap hampers progress due to the absence of standardized metrics and benchmarks. The commonly used evaluation metric has limitations, and creating ground truth annotations for neurons is impractical. Addressing these challenges, we propose an evaluation framework based on voting theory. Our hypothesis posits that neurons consistently identified by different methods carry more significant information. We rigorously assess our framework across a diverse array of neuron interpretation methods. Notable findings include: i) despite the theoretical differences among the methods, neuron ranking methods share over 60% of their rankings when identifying salient neurons, ii) the neuron interpretation methods are most sensitive to the last layer representations, iii) Probeless neuron ranking emerges as the most consistent method.
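The idea that neurons identified by several methods are more significant can be sketched as a simple vote over top-k rankings. The method names, the rankings, and the majority-style vote below are illustrative assumptions; the abstract does not specify the exact voting scheme used in the paper.

```python
from collections import Counter


def top_k_votes(rankings, k):
    """Count, for each neuron, how many methods place it in their top-k."""
    votes = Counter()
    for ranking in rankings.values():
        votes.update(ranking[:k])
    return votes


def consensus_neurons(rankings, k, min_votes):
    """Neurons that at least `min_votes` methods agree are salient."""
    votes = top_k_votes(rankings, k)
    return sorted(n for n, v in votes.items() if v >= min_votes)


def overlap(ranking_a, ranking_b, k):
    """Fraction of the top-k neurons shared by two rankings."""
    return len(set(ranking_a[:k]) & set(ranking_b[:k])) / k


# Hypothetical rankings: each list orders neuron indices from most to least salient.
rankings = {
    "method_a": [4, 7, 1, 9, 3, 0],
    "method_b": [7, 4, 9, 2, 1, 5],
    "method_c": [4, 9, 7, 0, 8, 2],
}

consensus = consensus_neurons(rankings, k=3, min_votes=2)
shared = overlap(rankings["method_a"], rankings["method_b"], k=3)
print(consensus, shared)
```

A consensus set built this way needs no ground-truth neuron annotations, which the abstract notes are impractical to create.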

@inproceedings{NEURIPS2023_eef6cb60,

author = {Fan, Yimin and Dalvi, Fahim and Durrani, Nadir and Sajjad, Hassan},

booktitle = {Advances in Neural Information Processing Systems},

editor = {A. Oh and T. Naumann and A. Globerson and K. Saenko and M. Hardt and S. Levine},

pages = {75644--75668},

publisher = {Curran Associates, Inc.},

title = {Evaluating Neuron Interpretation Methods of NLP Models},

url = {https://proceedings.neurips.cc/paper_files/paper/2023/file/eef6cb60fd59b32d35718e176b4b08d6-Paper-Conference.pdf},

volume = {36},

year = {2023}

}

Discovering Salient Neurons in Deep NLP models

While a lot of work has been done in understanding representations learned within deep NLP models and what knowledge they capture, work done towards analyzing individual neurons is relatively sparse. We present a technique called Linguistic Correlation Analysis to extract salient neurons in the model, with respect to any extrinsic property, with the goal of understanding how such knowledge is preserved within neurons. We carry out a fine-grained analysis to answer the following questions: (i) can we identify subsets of neurons in the network that learn a specific linguistic property? (ii) is a certain linguistic phenomenon in a given model localized (encoded in few individual neurons) or distributed across many neurons? (iii) how redundantly is the information preserved? (iv) how does fine-tuning pre-trained models towards downstream NLP tasks impact the learned linguistic knowledge? (v) how do models vary in learning different linguistic properties? Our data-driven, quantitative analysis illuminates interesting findings: (i) we found small subsets of neurons that can predict different linguistic tasks; (ii) neurons capturing basic lexical information, such as suffixation, are localized in the lowermost layers; (iii) neurons learning complex concepts, such as syntactic role, are predominantly found in middle and higher layers; (iv) salient linguistic neurons are relocated from higher to lower layers during transfer learning, as the network preserves the higher layers for task-specific information; (v) we found interesting differences across pre-trained models regarding how linguistic information is preserved within them; and (vi) we found that concepts exhibit similar neuron distribution across different languages in the multilingual transformer models. Our code is publicly available as part of the NeuroX toolkit (Dalvi et al., 2023).

@article{JMLR:v24:23-0074,

author = {Nadir Durrani and Fahim Dalvi and Hassan Sajjad},

title = {Discovering Salient Neurons in deep NLP models},

journal = {Journal of Machine Learning Research},

year = {2023},

volume = {24},

number = {362},

pages = {1--40},

url = {http://jmlr.org/papers/v24/23-0074.html}

}

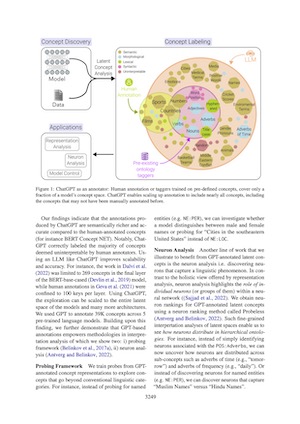

Can LLMs Facilitate Interpretation of Pre-trained Language Models?

Work done to uncover the knowledge encoded within pre-trained language models relies on annotated corpora or human-in-the-loop methods. However, these approaches are limited in terms of scalability and the scope of interpretation. We propose using a large language model, ChatGPT, as an annotator to enable fine-grained interpretation analysis of pre-trained language models. We discover latent concepts within pre-trained language models by applying agglomerative hierarchical clustering over contextualized representations and then annotate these concepts using ChatGPT. Our findings demonstrate that ChatGPT produces accurate and semantically richer annotations compared to human-annotated concepts. Additionally, we showcase how GPT-based annotations empower interpretation analysis methodologies, of which we demonstrate two: probing frameworks and neuron interpretation. To facilitate further exploration and experimentation in the field, we make available a substantial ConceptNet dataset (TCN) comprising 39,000 annotated concepts.

@inproceedings{mousi-etal-2023-llms,

title = "Can {LLM}s Facilitate Interpretation of Pre-trained Language Models?",

author = "Mousi, Basel and

Durrani, Nadir and

Dalvi, Fahim",

editor = "Bouamor, Houda and

Pino, Juan and

Bali, Kalika",

booktitle = "Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2023",

address = "Singapore",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.emnlp-main.196",

doi = "10.18653/v1/2023.emnlp-main.196",

pages = "3248--3268",

abstract = "Work done to uncover the knowledge encoded within pre-trained language models rely on annotated corpora or human-in-the-loop methods. However, these approaches are limited in terms of scalability and the scope of interpretation. We propose using a large language model, ChatGPT, as an annotator to enable fine-grained interpretation analysis of pre-trained language models. We discover latent concepts within pre-trained language models by applying agglomerative hierarchical clustering over contextualized representations and then annotate these concepts using ChatGPT. Our findings demonstrate that ChatGPT produces accurate and semantically richer annotations compared to human-annotated concepts. Additionally, we showcase how GPT-based annotations empower interpretation analysis methodologies of which we demonstrate two: probing frameworks and neuron interpretation. To facilitate further exploration and experimentation in the field, we make available a substantial ConceptNet dataset (TCN) comprising 39,000 annotated concepts.",

}

NeuroX Library for Neuron Analysis of Deep NLP Models

Neuron analysis provides insights into how knowledge is structured in representations and discovers the role of neurons in the network. In addition to developing an understanding of our models, neuron analysis enables various applications such as debiasing, domain adaptation and architectural search. We present NeuroX, a comprehensive open-source toolkit to conduct neuron analysis of natural language processing models. It implements various interpretation methods under a unified API, and provides a framework for data processing and evaluation, thus making it easier for researchers and practitioners to perform neuron analysis. The Python toolkit is available at https://www.github.com/fdalvi/NeuroX.

@inproceedings{dalvi-etal-2023-neurox,

title = "{N}euro{X} Library for Neuron Analysis of Deep {NLP} Models",

author = "Dalvi, Fahim and

Sajjad, Hassan and

Durrani, Nadir",

booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 3: System Demonstrations)",

month = jul,

year = "2023",

address = "Toronto, Canada",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.acl-demo.21",

doi = "10.18653/v1/2023.acl-demo.21",

pages = "226--234",

abstract = "Neuron analysis provides insights into how knowledge is structured in representations and discovers the role of neurons in the network. In addition to developing an understanding of our models, neuron analysis enables various applications such as debiasing, domain adaptation and architectural search. We present NeuroX, a comprehensive open-source toolkit to conduct neuron analysis of natural language processing models. It implements various interpretation methods under a unified API, and provides a framework for data processing and evaluation, thus making it easier for researchers and practitioners to perform neuron analysis. The Python toolkit is available at https://www.github.com/fdalvi/NeuroX. Demo Video available at: https://youtu.be/mLhs2YMx4u8",

}

NxPlain: A Web-based Tool for Discovery of Latent Concepts

The proliferation of deep neural networks in various domains has seen an increased need for the interpretability of these models, especially in scenarios where fairness and trust are as important as model performance. A lot of independent work is being carried out to: i) analyze what linguistic and non-linguistic knowledge is learned within these models, and ii) highlight the salient parts of the input. We present NxPlain, a web-app that provides an explanation of a model's prediction using latent concepts. NxPlain discovers latent concepts learned in a deep NLP model, provides an interpretation of the knowledge learned in the model, and explains its predictions based on the used concepts. The application allows users to browse through the latent concepts in an intuitive order, letting them efficiently scan through the most salient concepts with a global corpus-level view and a local sentence-level view. Our tool is useful for debugging, unraveling model bias, and for highlighting spurious correlations in a model. A hosted demo is available here: https://nxplain.qcri.org

@inproceedings{dalvi-etal-2023-nxplain,

title = "{N}x{P}lain: A Web-based Tool for Discovery of Latent Concepts",

author = "Dalvi, Fahim and

Durrani, Nadir and

Sajjad, Hassan and

Jaban, Tamim and

Husaini, Mus{'}ab and

Abbas, Ummar",

booktitle = "Proceedings of the 17th Conference of the European Chapter of the Association for Computational Linguistics: System Demonstrations",

month = may,

year = "2023",

address = "Dubrovnik, Croatia",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.eacl-demo.10",

doi = "10.18653/v1/2023.eacl-demo.10",

pages = "75--83",

abstract = "The proliferation of deep neural networks in various domains has seen an increased need for the interpretability of these models, especially in scenarios where fairness and trust are as important as model performance. A lot of independent work is being carried out to: i) analyze what linguistic and non-linguistic knowledge is learned within these models, and ii) highlight the salient parts of the input. We present NxPlain, a web-app that provides an explanation of a model{'}s prediction using latent concepts. NxPlain discovers latent concepts learned in a deep NLP model, provides an interpretation of the knowledge learned in the model, and explains its predictions based on the used concepts. The application allows users to browse through the latent concepts in an intuitive order, letting them efficiently scan through the most salient concepts with a global corpus-level view and a local sentence-level view. Our tool is useful for debugging, unraveling model bias, and for highlighting spurious correlations in a model. A hosted demo is available here: https://nxplain.qcri.org",

}

ConceptX: A Framework for Latent Concept Analysis

The opacity of deep neural networks remains a challenge in deploying solutions where explanation is as important as precision. We present ConceptX, a human-in-the-loop framework for interpreting and annotating latent representational space in pre-trained Language Models (pLMs). We use an unsupervised method to discover concepts learned in these models and enable a graphical interface for humans to generate explanations for the concepts. To facilitate the process, we provide auto-annotations of the concepts (based on traditional linguistic ontologies). Such annotations enable development of a linguistic resource that directly represents latent concepts learned within deep NLP models. These include not just traditional linguistic concepts, but also task-specific or sensitive concepts (words grouped based on gender or religious connotation) that help the annotators to mark bias in the model. The framework consists of two parts: (i) concept discovery and (ii) annotation platform.

@article{Alam_Dalvi_Durrani_Sajjad_Khan_Xu_2023,

title={ConceptX: A Framework for Latent Concept Analysis},

volume={37},

url={https://ojs.aaai.org/index.php/AAAI/article/view/27057},

DOI={10.1609/aaai.v37i13.27057},

abstractNote={The opacity of deep neural networks remains a challenge in deploying solutions where explanation is as important as precision. We present ConceptX, a human-in-the-loop framework for interpreting and annotating latent representational space in pre-trained Language Models (pLMs). We use an unsupervised method to discover concepts learned in these models and enable a graphical interface for humans to generate explanations for the concepts. To facilitate the process, we provide auto-annotations of the concepts (based on traditional linguistic ontologies). Such annotations enable development of a linguistic resource that directly represents latent concepts learned within deep NLP models. These include not just traditional linguistic concepts, but also task-specific or sensitive concepts (words grouped based on gender or religious connotation) that helps the annotators to mark bias in the model. The framework consists of two parts (i) concept discovery and (ii) annotation platform.},

number={13},

journal={Proceedings of the AAAI Conference on Artificial Intelligence},

author={Alam, Firoj and Dalvi, Fahim and Durrani, Nadir and Sajjad, Hassan and Khan, Abdul Rafae and Xu, Jia},

year={2023},

month={Sep.},

pages={16395-16397}

}

On the effect of dropping layers of pre-trained transformer models

Transformer-based NLP models are trained using hundreds of millions or even billions of parameters, limiting their applicability in computationally constrained environments. While the number of parameters generally correlates with performance, it is not clear whether the entire network is required for a downstream task. Motivated by the recent work on pruning and distilling pre-trained models, we explore strategies to drop layers in pre-trained models, and observe the effect of pruning on downstream GLUE tasks. We were able to prune BERT, RoBERTa and XLNet models up to 40%, while maintaining up to 98% of their original performance. Additionally, we show that our pruned models are on par with those built using knowledge distillation, both in terms of size and performance. Our experiments yield interesting observations such as: (i) the lower layers are most critical to maintain downstream task performance, (ii) some tasks such as paraphrase detection and sentence similarity are more robust to the dropping of layers, and (iii) models trained using different objective functions exhibit different learning patterns w.r.t. layer dropping.
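One possible layer-dropping strategy, suggested by the observation that lower layers matter most, is to keep the bottom of the stack and discard the top. The sketch below shows only that selection step on a hypothetical 12-layer stack; the layer names and the function are illustrative assumptions, and a real pruned model would still need fine-tuning on the downstream task.

```python
def drop_top_layers(layers, num_to_drop):
    """Keep the lower layers of an ordered stack and discard the top ones."""
    if not 0 <= num_to_drop < len(layers):
        raise ValueError("num_to_drop must leave at least one layer in place")
    return layers[: len(layers) - num_to_drop]


# Hypothetical 12-layer encoder, ordered from the lowest to the highest layer.
layers = [f"layer_{i}" for i in range(12)]

pruned = drop_top_layers(layers, num_to_drop=4)
kept_fraction = len(pruned) / len(layers)
print(pruned, kept_fraction)
```

Other selection rules (for example dropping alternate layers) fit the same shape: they return a subset of the ordered layer list that is then used to rebuild the encoder.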

@article{SAJJAD2023101429,

title = {On the effect of dropping layers of pre-trained transformer models},

journal = {Computer Speech & Language},

volume = {77},

pages = {101429},

year = {2023},

issn = {0885-2308},

doi = {https://doi.org/10.1016/j.csl.2022.101429},

url = {https://www.sciencedirect.com/science/article/pii/S0885230822000596},

author = {Hassan Sajjad and Fahim Dalvi and Nadir Durrani and Preslav Nakov},

keywords = {Pre-trained transformer models, Efficient transfer learning, Interpretation and analysis},

abstract = {Transformer-based NLP models are trained using hundreds of millions or even billions of parameters, limiting their applicability in computationally constrained environments. While the number of parameters generally correlates with performance, it is not clear whether the entire network is required for a downstream task. Motivated by the recent work on pruning and distilling pre-trained models, we explore strategies to drop layers in pre-trained models, and observe the effect of pruning on downstream GLUE tasks. We were able to prune BERT, RoBERTa and XLNet models up to 40%, while maintaining up to 98% of their original performance. Additionally we show that our pruned models are on par with those built using knowledge distillation, both in terms of size and performance. Our experiments yield interesting observations such as: (i) the lower layers are most critical to maintain downstream task performance, (ii) some tasks such as paraphrase detection and sentence similarity are more robust to the dropping of layers, and (iii) models trained using different objective function exhibit different learning patterns and w.r.t the layer dropping.}

}

2022

On the Transformation of Latent Space in Fine-Tuned NLP Models

We study the evolution of latent space in fine-tuned NLP models. Different from the commonly used probing framework, we opt for an unsupervised method to analyze representations. More specifically, we discover latent concepts in the representational space using hierarchical clustering. We then use an alignment function to gauge the similarity between the latent space of a pre-trained model and its fine-tuned version. We use traditional linguistic concepts to facilitate our understanding and also study how the model space transforms towards task-specific information. We perform a thorough analysis, comparing pre-trained and fine-tuned models across three models and three downstream tasks. The notable findings of our work are: i) the latent space of the higher layers evolves towards task-specific concepts, ii) whereas the lower layers retain generic concepts acquired in the pre-trained model, iii) we discovered that some concepts in the higher layers acquire polarity towards the output class, and iv) that these concepts can be used for generating adversarial triggers.
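To make the notion of an alignment function concrete, the sketch below treats each latent concept as a set of words and counts a pre-trained concept as aligned when enough of its words reappear together in some fine-tuned concept. The overlap rule, the 0.75 threshold, and the toy concepts are assumptions for illustration; the abstract does not define the function the paper actually uses.

```python
def is_aligned(concept, other, threshold):
    """True when at least `threshold` of the words in `concept` also occur in `other`."""
    concept, other = set(concept), set(other)
    return len(concept & other) / len(concept) >= threshold


def alignment_score(base_concepts, tuned_concepts, threshold=0.75):
    """Fraction of base-model concepts that have an aligned fine-tuned concept."""
    matched = sum(
        any(is_aligned(c, o, threshold) for o in tuned_concepts)
        for c in base_concepts
    )
    return matched / len(base_concepts)


# Hypothetical concepts (word clusters) from a pre-trained and a fine-tuned model.
base_concepts = [
    {"run", "walk", "jump", "swim"},
    {"red", "blue", "green", "pink"},
]
tuned_concepts = [
    {"run", "walk", "jump", "good"},
    {"bad", "awful", "poor", "red"},
]

score = alignment_score(base_concepts, tuned_concepts)
print(score)
```

Computing such a score per layer would show where the fine-tuned space stays close to the pre-trained one and where it drifts.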

@inproceedings{durrani-etal-2022-transformation,

title = "On the Transformation of Latent Space in Fine-Tuned {NLP} Models",

author = "Durrani, Nadir and

Sajjad, Hassan and

Dalvi, Fahim and

Alam, Firoj",

booktitle = "Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2022",

address = "Abu Dhabi, United Arab Emirates",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.emnlp-main.97",

doi = "10.18653/v1/2022.emnlp-main.97",

pages = "1495--1516",

abstract = "We study the evolution of latent space in fine-tuned NLP models. Different from the commonly used probing-framework, we opt for an unsupervised method to analyze representations. More specifically, we discover latent concepts in the representational space using hierarchical clustering. We then use an alignment function to gauge the similarity between the latent space of a pre-trained model and its fine-tuned version. We use traditional linguistic concepts to facilitate our understanding and also study how the model space transforms towards task-specific information. We perform a thorough analysis, comparing pre-trained and fine-tuned models across three models and three downstream tasks. The notable findings of our work are: i) the latent space of the higher layers evolve towards task-specific concepts, ii) whereas the lower layers retain generic concepts acquired in the pre-trained model, iii) we discovered that some concepts in the higher layers acquire polarity towards the output class, and iv) that these concepts can be used for generating adversarial triggers.",

}

Post-hoc analysis of Arabic transformer models

Arabic is a Semitic language which is widely spoken with many dialects. Given the success of pre-trained language models, many transformer models trained on Arabic and its dialects have surfaced. While there has been an extrinsic evaluation of these models with respect to downstream NLP tasks, no work has been carried out to analyze and compare their internal representations. We probe how linguistic information is encoded in the transformer models, trained on different Arabic dialects. We perform a layer and neuron analysis on the models using morphological tagging tasks for different dialects of Arabic and a dialectal identification task. Our analysis reveals interesting findings such as: i) word morphology is learned at the lower and middle layers, ii) while syntactic dependencies are predominantly captured at the higher layers, iii) despite a large overlap in their vocabulary, the MSA-based models fail to capture the nuances of Arabic dialects, iv) we found that neurons in embedding layers are polysemous in nature, while the neurons in middle layers are exclusive to specific properties.

@inproceedings{abdelali-etal-2022-post,

title = "Post-hoc analysis of {A}rabic transformer models",

author = "Abdelali, Ahmed and

Durrani, Nadir and

Dalvi, Fahim and

Sajjad, Hassan",

booktitle = "Proceedings of the Fifth BlackboxNLP Workshop on Analyzing and Interpreting Neural Networks for NLP",

month = dec,

year = "2022",

address = "Abu Dhabi, United Arab Emirates (Hybrid)",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.blackboxnlp-1.8",

doi = "10.18653/v1/2022.blackboxnlp-1.8",

pages = "91--103",

abstract = "Arabic is a Semitic language which is widely spoken with many dialects. Given the success of pre-trained language models, many transformer models trained on Arabic and its dialects have surfaced. While there have been an extrinsic evaluation of these models with respect to downstream NLP tasks, no work has been carried out to analyze and compare their internal representations. We probe how linguistic information is encoded in the transformer models, trained on different Arabic dialects. We perform a layer and neuron analysis on the models using morphological tagging tasks for different dialects of Arabic and a dialectal identification task. Our analysis enlightens interesting findings such as: i) word morphology is learned at the lower and middle layers, ii) while syntactic dependencies are predominantly captured at the higher layers, iii) despite a large overlap in their vocabulary, the MSA-based models fail to capture the nuances of Arabic dialects, iv) we found that neurons in embedding layers are polysemous in nature, while the neurons in middle layers are exclusive to specific properties.",

}

NatiQ: An End-to-end Text-to-Speech System for Arabic

NatiQ is an end-to-end text-to-speech system for Arabic. Our speech synthesizer uses an encoder-decoder architecture with attention. We used both tacotron-based models (tacotron-1 and tacotron-2) and the faster transformer model for generating mel-spectrograms from characters. We concatenated Tacotron1 with the WaveRNN vocoder, Tacotron2 with the WaveGlow vocoder and ESPnet transformer with the parallel wavegan vocoder to synthesize waveforms from the spectrograms. We used in-house speech data for two voices: 1) neutral male "Hamza", narrating general content and news, and 2) expressive female "Amina", narrating children story books to train our models. Our best systems achieve an average Mean Opinion Score (MOS) of 4.21 and 4.40 for Amina and Hamza respectively. The objective evaluation of the systems using word and character error rate (WER and CER) as well as the response time measured by real-time factor favored the end-to-end architecture ESPnet. NatiQ demo is available online at https://tts.qcri.org.

@inproceedings{abdelali-etal-2022-natiq,

title = "{N}ati{Q}: An End-to-end Text-to-Speech System for {A}rabic",

author = "Abdelali, Ahmed and

Durrani, Nadir and

Demiroglu, Cenk and

Dalvi, Fahim and

Mubarak, Hamdy and

Darwish, Kareem",

booktitle = "Proceedings of the The Seventh Arabic Natural Language Processing Workshop (WANLP)",

month = dec,

year = "2022",

address = "Abu Dhabi, United Arab Emirates (Hybrid)",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.wanlp-1.38",

doi = "10.18653/v1/2022.wanlp-1.38",

pages = "394--398",

abstract = "NatiQ is an end-to-end text-to-speech system for Arabic. Our speech synthesizer uses an encoder-decoder architecture with attention. We used both tacotron-based models (tacotron-1 and tacotron-2) and the faster transformer model for generating mel-spectrograms from characters. We concatenated Tacotron1 with the WaveRNN vocoder, Tacotron2 with the WaveGlow vocoder and ESPnet transformer with the parallel wavegan vocoder to synthesize waveforms from the spectrograms. We used in-house speech data for two voices: 1) neutral male {``}Hamza{''}- narrating general content and news, and 2) expressive female {``}Amina{''}- narrating children story books to train our models. Our best systems achieve an average Mean Opinion Score (MOS) of 4.21 and 4.40 for Amina and Hamza respectively. The objective evaluation of the systems using word and character error rate (WER and CER) as well as the response time measured by real-time factor favored the end-to-end architecture ESPnet. NatiQ demo is available online at https://tts.qcri.org.",

}
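The objective metrics named in the NatiQ abstract above (WER, CER and real-time factor) can be illustrated with a minimal sketch. It assumes the usual definitions: error rate is the edit distance between a reference text and a hypothesis transcript divided by the reference length, and real-time factor is synthesis time divided by the duration of the generated audio. The function names are hypothetical, and how the paper obtained its transcripts and timings is not stated here.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (unit cost per edit)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def wer(reference, hypothesis):
    """Word error rate: word-level edit distance over reference word count."""
    ref_words = reference.split()
    if not ref_words:
        raise ValueError("reference must contain at least one word")
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)


def cer(reference, hypothesis):
    """Character error rate: character-level edit distance over reference length."""
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


def real_time_factor(synthesis_seconds, audio_seconds):
    """Values below 1.0 mean synthesis runs faster than playback."""
    return synthesis_seconds / audio_seconds
```

For example, `wer("the cat sat on the mat", "the cat sat on mat")` counts one deleted word against six reference words.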

Neuron-level Interpretation of Deep NLP Models: A Survey

The proliferation of Deep Neural Networks in various domains has seen an increased need for interpretability of these models. Preliminary work done along this line, and papers that surveyed such, are focused on high-level representation analysis. However, a recent branch of work has concentrated on interpretability at a more granular level of analyzing neurons within these models. In this paper, we survey the work done on neuron analysis including: i) methods to discover and understand neurons in a network; ii) evaluation methods; iii) major findings including cross architectural comparisons that neuron analysis has unraveled; iv) applications of neuron probing such as: controlling the model, domain adaptation, and so forth; and v) a discussion on open issues and future research directions.

@article{sajjad-etal-2022-neuron,

title = "Neuron-level Interpretation of Deep {NLP} Models: A Survey",

author = "Sajjad, Hassan and

Durrani, Nadir and

Dalvi, Fahim",

journal = "Transactions of the Association for Computational Linguistics",

volume = "10",

year = "2022",

address = "Cambridge, MA",

publisher = "MIT Press",

url = "https://aclanthology.org/2022.tacl-1.74",

doi = "10.1162/tacl_a_00519",

pages = "1285--1303",

abstract = "The proliferation of Deep Neural Networks in various domains has seen an increased need for interpretability of these models. Preliminary work done along this line, and papers that surveyed such, are focused on high-level representation analysis. However, a recent branch of work has concentrated on interpretability at a more granular level of analyzing neurons within these models. In this paper, we survey the work done on neuron analysis including: i) methods to discover and understand neurons in a network; ii) evaluation methods; iii) major findings including cross architectural comparisons that neuron analysis has unraveled; iv) applications of neuron probing such as: controlling the model, domain adaptation, and so forth; and v) a discussion on open issues and future research directions.",

}

Effect of Post-processing on Contextualized Word Representations

Post-processing of static embedding has been shown to improve their performance on both lexical and sequence-level tasks. However, post-processing for contextualized embeddings is an under-studied problem. In this work, we question the usefulness of post-processing for contextualized embeddings obtained from different layers of pre-trained language models. More specifically, we standardize individual neuron activations using z-score, min-max normalization, and by removing top principal components using the all-but-the-top method. Additionally, we apply unit length normalization to word representations. On a diverse set of pre-trained models, we show that post-processing unwraps vital information present in the representations for both lexical tasks (such as word similarity and analogy) and sequence classification tasks. Our findings raise interesting points in relation to the research studies that use contextualized representations, and suggest z-score normalization as an essential step to consider when using them in an application.

@inproceedings{sajjad-etal-2022-effect,

title = "Effect of Post-processing on Contextualized Word Representations",

author = "Sajjad, Hassan and

Alam, Firoj and

Dalvi, Fahim and

Durrani, Nadir",

booktitle = "Proceedings of the 29th International Conference on Computational Linguistics",

month = oct,

year = "2022",

address = "Gyeongju, Republic of Korea",

publisher = "International Committee on Computational Linguistics",

url = "https://aclanthology.org/2022.coling-1.277",

pages = "3127--3142",

abstract = "Post-processing of static embedding has been shown to improve their performance on both lexical and sequence-level tasks. However, post-processing for contextualized embeddings is an under-studied problem. In this work, we question the usefulness of post-processing for contextualized embeddings obtained from different layers of pre-trained language models. More specifically, we standardize individual neuron activations using z-score, min-max normalization, and by removing top principal components using the all-but-the-top method. Additionally, we apply unit length normalization to word representations. On a diverse set of pre-trained models, we show that post-processing unwraps vital information present in the representations for both lexical tasks (such as word similarity and analogy) and sequence classification tasks. Our findings raise interesting points in relation to the research studies that use contextualized representations, and suggest z-score normalization as an essential step to consider when using them in an application.",

}
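Three of the post-processing steps named in the abstract above (z-score standardization, min-max normalization and unit-length normalization) can be sketched in a few lines. This is an illustrative sketch, not the authors' code: it assumes representations are given as a list of rows (one per word) with one column per neuron, the function names are hypothetical, and the all-but-the-top step is omitted because it requires a principal component analysis.

```python
import math
from statistics import mean, pstdev


def zscore_columns(rows):
    """Standardize each neuron (column) to zero mean and unit variance."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sigmas = [pstdev(c) or 1.0 for c in cols]  # guard against constant neurons
    return [[(x - m) / s for x, m, s in zip(row, mus, sigmas)] for row in rows]


def minmax_columns(rows):
    """Rescale each neuron (column) to the range [0, 1]."""
    cols = list(zip(*rows))
    lows = [min(c) for c in cols]
    spans = [(max(c) - min(c)) or 1.0 for c in cols]  # guard against zero range
    return [[(x - lo) / sp for x, lo, sp in zip(row, lows, spans)] for row in rows]


def unit_length_rows(rows):
    """Scale each word representation (row) to Euclidean norm 1."""
    out = []
    for row in rows:
        norm = math.sqrt(sum(x * x for x in row)) or 1.0  # leave zero vectors as-is
        out.append([x / norm for x in row])
    return out
```

Note the difference in axis: the first two functions operate per neuron across all words, while unit-length normalization operates per word across all neurons.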

Analyzing Encoded Concepts in Transformer Language Models

We propose a novel framework ConceptX, to analyze how latent concepts are encoded in representations learned within pre-trained language models. It uses clustering to discover the encoded concepts and explains them by aligning with a large set of human-defined concepts. Our analysis on seven transformer language models reveal interesting insights: i) the latent space within the learned representations overlap with different linguistic concepts to a varying degree, ii) the lower layers in the model are dominated by lexical concepts (e.g., affixation) and linguistic ontologies (e.g. WordNet), whereas the core-linguistic concepts (e.g., morphology, syntactic relations) are better represented in the middle and higher layers, iii) some encoded concepts are multi-faceted and cannot be adequately explained using the existing human-defined concepts.

@inproceedings{sajjad-etal-2022-analyzing,

title = "Analyzing Encoded Concepts in Transformer Language Models",

author = "Sajjad, Hassan and

Durrani, Nadir and

Dalvi, Fahim and

Alam, Firoj and

Khan, Abdul and

Xu, Jia",

booktitle = "Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies",

month = jul,

year = "2022",

address = "Seattle, United States",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.naacl-main.225",

doi = "10.18653/v1/2022.naacl-main.225",

pages = "3082--3101",

abstract = "We propose a novel framework ConceptX, to analyze how latent concepts are encoded in representations learned within pre-trained language models. It uses clustering to discover the encoded concepts and explains them by aligning with a large set of human-defined concepts. Our analysis on seven transformer language models reveal interesting insights: i) the latent space within the learned representations overlap with different linguistic concepts to a varying degree, ii) the lower layers in the model are dominated by lexical concepts (e.g., affixation) and linguistic ontologies (e.g. WordNet), whereas the core-linguistic concepts (e.g., morphology, syntactic relations) are better represented in the middle and higher layers, iii) some encoded concepts are multi-faceted and cannot be adequately explained using the existing human-defined concepts.",

}
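The idea of explaining a discovered cluster by aligning it with human-defined concepts, as described in the ConceptX abstract above, can be illustrated with a small sketch. The rule used here is an assumption made for illustration, not a detail taken from the paper: a cluster is assigned a concept label when at least a given fraction of its tokens carry that label. The function name, the dictionary format and the `threshold` default are all hypothetical.

```python
from collections import Counter


def align_cluster(cluster_tokens, token_to_concept, threshold=0.9):
    """Return the concept covering at least `threshold` of the cluster, else None.

    cluster_tokens:   tokens grouped together by some clustering step
    token_to_concept: hypothetical mapping from token to a human-defined label
    """
    if not cluster_tokens:
        return None
    counts = Counter(token_to_concept.get(tok) for tok in cluster_tokens)
    counts.pop(None, None)  # ignore tokens with no known label
    if not counts:
        return None
    label, hits = counts.most_common(1)[0]
    return label if hits / len(cluster_tokens) >= threshold else None
```

A cluster that returns `None` under this rule corresponds to the case the abstract mentions, where an encoded concept is not adequately explained by the available human-defined labels.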

Discovering Latent Concepts Learned in BERT

A large number of studies that analyze deep neural network models and their ability to encode various linguistic and non-linguistic concepts provide an interpretation of the inner mechanics of these models. The scope of the analyses is limited to pre-defined concepts that reinforce the traditional linguistic knowledge and do not reflect on how novel concepts are learned by the model. We address this limitation by discovering and analyzing latent concepts learned in neural network models in an unsupervised fashion and provide interpretations from the model's perspective. In this work, we study: i) what latent concepts exist in the pre-trained BERT model, ii) how the discovered latent concepts align or diverge from classical linguistic hierarchy and iii) how the latent concepts evolve across layers. Our findings show: i) a model learns novel concepts (e.g. animal categories and demographic groups), which do not strictly adhere to any pre-defined categorization (e.g. POS, semantic tags), ii) several latent concepts are based on multiple properties which may include semantics, syntax, and morphology, iii) the lower layers in the model dominate in learning shallow lexical concepts while the higher layers learn semantic relations and iv) the discovered latent concepts highlight potential biases learned in the model. We also release a novel BERT ConceptNet dataset consisting of 174 concept labels and 1M annotated instances.

@inproceedings{

dalvi2022discovering,

title={Discovering Latent Concepts Learned in {BERT}},

author={Fahim Dalvi

and Abdul Rafae Khan

and Firoj Alam

and Nadir Durrani

and Jia Xu

and Hassan Sajjad},

booktitle={International Conference on Learning Representations},

year={2022},

url={https://openreview.net/forum?id=POTMtpYI1xH}

}

2021

Fighting the COVID-19 Infodemic: Modeling the Perspective of Journalists, Fact-Checkers, Social Media Platforms, Policy Makers, and the Society

With the emergence of the COVID-19 pandemic, the political and the medical aspects of disinformation merged as the problem got elevated to a whole new level to become the first global infodemic. Fighting this infodemic has been declared one of the most important focus areas of the World Health Organization, with dangers ranging from promoting fake cures, rumors, and conspiracy theories to spreading xenophobia and panic. Addressing the issue requires solving a number of challenging problems such as identifying messages containing claims, determining their check-worthiness and factuality, and their potential to do harm as well as the nature of that harm, to mention just a few. To address this gap, we release a large dataset of 16K manually annotated tweets for fine-grained disinformation analysis that (i) focuses on COVID-19, (ii) combines the perspectives and the interests of journalists, fact-checkers, social media platforms, policy makers, and society, and (iii) covers Arabic, Bulgarian, Dutch, and English. Finally, we show strong evaluation results using pretrained Transformers, thus confirming the practical utility of the dataset in monolingual vs. multilingual, and single task vs. multitask settings.

@inproceedings{alam-etal-2021-fighting-covid,

title = "Fighting the {COVID}-19 Infodemic: Modeling the Perspective of Journalists, Fact-Checkers, Social Media Platforms, Policy Makers, and the Society",

author = "Alam, Firoj and

Shaar, Shaden and

Dalvi, Fahim and

Sajjad, Hassan and

Nikolov, Alex and

Mubarak, Hamdy and

Da San Martino, Giovanni and

Abdelali, Ahmed and

Durrani, Nadir and

Darwish, Kareem and

Al-Homaid, Abdulaziz and

Zaghouani, Wajdi and

Caselli, Tommaso and

Danoe, Gijs and

Stolk, Friso and

Bruntink, Britt and

Nakov, Preslav",

booktitle = "Findings of the Association for Computational Linguistics: EMNLP 2021",

month = nov,

year = "2021",

address = "Punta Cana, Dominican Republic",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.findings-emnlp.56",

doi = "10.18653/v1/2021.findings-emnlp.56",

pages = "611--649",

abstract = "With the emergence of the COVID-19 pandemic, the political and the medical aspects of disinformation merged as the problem got elevated to a whole new level to become the first global infodemic. Fighting this infodemic has been declared one of the most important focus areas of the World Health Organization, with dangers ranging from promoting fake cures, rumors, and conspiracy theories to spreading xenophobia and panic. Addressing the issue requires solving a number of challenging problems such as identifying messages containing claims, determining their check-worthiness and factuality, and their potential to do harm as well as the nature of that harm, to mention just a few. To address this gap, we release a large dataset of 16K manually annotated tweets for fine-grained disinformation analysis that (i) focuses on COVID-19, (ii) combines the perspectives and the interests of journalists, fact-checkers, social media platforms, policy makers, and society, and (iii) covers Arabic, Bulgarian, Dutch, and English. Finally, we show strong evaluation results using pretrained Transformers, thus confirming the practical utility of the dataset in monolingual vs. multilingual, and single task vs. multitask settings.",

}

How transfer learning impacts linguistic knowledge in deep NLP models?

Transfer learning from pre-trained neural language models towards downstream tasks has been a predominant theme in NLP recently. Several researchers have shown that deep NLP models learn non-trivial amount of linguistic knowledge, captured at different layers of the model. We investigate how fine-tuning towards downstream NLP tasks impacts the learned linguistic knowledge. We carry out a study across popular pre-trained models BERT, RoBERTa and XLNet using layer and neuron-level diagnostic classifiers. We found that for some GLUE tasks, the network relies on the core linguistic information and preserve it deeper in the network, while for others it forgets. Linguistic information is distributed in the pre-trained language models but becomes localized to the lower layers post-fine-tuning, reserving higher layers for the task specific knowledge. The pattern varies across architectures, with BERT retaining linguistic information relatively deeper in the network compared to RoBERTa and XLNet, where it is predominantly delegated to the lower layers.

@inproceedings{durrani-etal-2021-transfer,

title = "How transfer learning impacts linguistic knowledge in deep {NLP} models?",

author = "Durrani, Nadir and

Sajjad, Hassan and

Dalvi, Fahim",

booktitle = "Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021",

month = aug,

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2021.findings-acl.438",

doi = "10.18653/v1/2021.findings-acl.438",

pages = "4947--4957",

}

Fighting the COVID-19 Infodemic in Social Media: A Holistic Perspective and a Call to Arms

With the outbreak of the COVID-19 pandemic, people turned to social media to read and to share timely information including statistics, warnings, advice, and inspirational stories. Unfortunately, alongside all this useful information, there was also a new blending of medical and political misinformation and disinformation, which gave rise to the first global infodemic. While fighting this infodemic is typically thought of in terms of factuality, the problem is much broader as malicious content includes not only fake news, rumors, and conspiracy theories, but also promotion of fake cures, panic, racism, xenophobia, and mistrust in the authorities, among others. This is a complex problem that needs a holistic approach combining the perspectives of journalists, fact-checkers, policymakers, government entities, social media platforms, and society as a whole. With this in mind, we define an annotation schema and detailed annotation instructions that reflect these perspectives. We further deploy a multilingual annotation platform, and we issue a call to arms to the research community and beyond to join the fight by supporting our crowdsourcing annotation efforts. We perform initial annotations using the annotation schema, and our initial experiments demonstrated sizable improvements over the baselines.

@article{Alam_covid_infodemic_2021,

title={Fighting the COVID-19 Infodemic in Social Media: A Holistic Perspective and a Call to Arms},

author={Alam, Firoj and Dalvi, Fahim and Shaar, Shaden and Durrani, Nadir and Mubarak, Hamdy and Nikolov, Alex and Da San Martino, Giovanni and Abdelali, Ahmed and Sajjad, Hassan and Darwish, Kareem and Nakov, Preslav},

volume={15},

url={https://ojs.aaai.org/index.php/ICWSM/article/view/18114},

number={1},

journal={Proceedings of the International AAAI Conference on Web and Social Media},

year={2021},

month={May},

pages={913-922},

abstractNote={With the outbreak of the COVID-19 pandemic, people turned to social media to read and to share timely information including statistics, warnings, advice, and inspirational stories. Unfortunately, alongside all this useful information, there was also a new blending of medical and political misinformation and disinformation, which gave rise to the first global infodemic. While fighting this infodemic is typically thought of in terms of factuality, the problem is much broader as malicious content includes not only fake news, rumors, and conspiracy theories, but also promotion of fake cures, panic, racism, xenophobia, and mistrust in the authorities, among others. This is a complex problem that needs a holistic approach combining the perspectives of journalists, fact-checkers, policymakers, government entities, social media platforms, and society as a whole. With this in mind, we define an annotation schema and detailed annotation instructions that reflect these perspectives. We further deploy a multilingual annotation platform, and we issue a call to arms to the research community and beyond to join the fight by supporting our crowdsourcing annotation efforts. We perform initial annotations using the annotation schema, and our initial experiments demonstrated sizable improvements over the baselines.}

}

Fine-grained Interpretation and Causation Analysis in Deep NLP Models

Deep neural networks have constantly pushed the state-of-the-art performance in natural language processing and are considered as the de-facto modeling approach in solving complex NLP tasks such as machine translation, summarization and question-answering. Despite the proven efficacy of deep neural networks at-large, their opaqueness is a major cause of concern. In this tutorial, we will present research work on interpreting fine-grained components of a neural network model from two perspectives, i) fine-grained interpretation, and ii) causation analysis. The former is a class of methods to analyze neurons with respect to a desired language concept or a task. The latter studies the role of neurons and input features in explaining the decisions made by the model. We will also discuss how interpretation methods and causation analysis can connect towards better interpretability of model prediction. Finally, we will walk you through various toolkits that facilitate fine-grained interpretation and causation analysis of neural models.

@inproceedings{sajjad-etal-2021-fine,

title = "Fine-grained Interpretation and Causation Analysis in Deep {NLP} Models",

author = "Sajjad, Hassan and

Kokhlikyan, Narine and

Dalvi, Fahim and

Durrani, Nadir",

booktitle = "Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: Tutorials",

month = jun,

year = "2021",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2021.naacl-tutorials.2",

pages = "5--10",

abstract = "Deep neural networks have constantly pushed the state-of-the-art performance in natural language processing and are considered as the de-facto modeling approach in solving complex NLP tasks such as machine translation, summarization and question-answering. Despite the proven efficacy of deep neural networks at-large, their opaqueness is a major cause of concern. In this tutorial, we will present research work on interpreting fine-grained components of a neural network model from two perspectives, i) fine-grained interpretation, and ii) causation analysis. The former is a class of methods to analyze neurons with respect to a desired language concept or a task. The latter studies the role of neurons and input features in explaining the decisions made by the model. We will also discuss how interpretation methods and causation analysis can connect towards better interpretability of model prediction. Finally, we will walk you through various toolkits that facilitate fine-grained interpretation and causation analysis of neural models.",

}

2020

AraBench: Benchmarking Dialectal Arabic-English Machine Translation

Low-resource machine translation suffers from the scarcity of training data and the unavailability of standard evaluation sets. While a number of research efforts target the former, the unavailability of evaluation benchmarks remain a major hindrance in tracking the progress in low-resource machine translation. In this paper, we introduce AraBench, an evaluation suite for dialectal Arabic to English machine translation. Compared to Modern Standard Arabic, Arabic dialects are challenging due to their spoken nature, non-standard orthography, and a large variation in dialectness. To this end, we pool together already available Dialectal Arabic-English resources and additionally build novel test sets. AraBench offers 4 coarse, 15 fine-grained and 25 city-level dialect categories, belonging to diverse genres, such as media, chat, religion and travel with varying level of dialectness. We report strong baselines using several training settings: fine-tuning, back-translation and data augmentation. The evaluation suite opens a wide range of research frontiers to push efforts in low-resource machine translation, particularly Arabic dialect translation. The evaluation suite and the dialectal system are publicly available for research purposes.

@inproceedings{sajjad-etal-2020-arabench,

title = "{A}ra{B}ench: Benchmarking Dialectal {A}rabic-{E}nglish Machine Translation",

author = "Sajjad, Hassan and

Abdelali, Ahmed and

Durrani, Nadir and

Dalvi, Fahim",

booktitle = "Proceedings of the 28th International Conference on Computational Linguistics",

month = dec,

year = "2020",

address = "Barcelona, Spain (Online)",

publisher = "International Committee on Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.coling-main.447",

doi = "10.18653/v1/2020.coling-main.447",

pages = "5094--5107",

abstract = "Low-resource machine translation suffers from the scarcity of training data and the unavailability of standard evaluation sets. While a number of research efforts target the former, the unavailability of evaluation benchmarks remain a major hindrance in tracking the progress in low-resource machine translation. In this paper, we introduce AraBench, an evaluation suite for dialectal Arabic to English machine translation. Compared to Modern Standard Arabic, Arabic dialects are challenging due to their spoken nature, non-standard orthography, and a large variation in dialectness. To this end, we pool together already available Dialectal Arabic-English resources and additionally build novel test sets. AraBench offers 4 coarse, 15 fine-grained and 25 city-level dialect categories, belonging to diverse genres, such as media, chat, religion and travel with varying level of dialectness. We report strong baselines using several training settings: fine-tuning, back-translation and data augmentation. The evaluation suite opens a wide range of research frontiers to push efforts in low-resource machine translation, particularly Arabic dialect translation. The evaluation suite and the dialectal system are publicly available for research purposes.",

}

Analyzing Individual Neurons in Pre-trained Language Models

While a lot of analysis has been carried to demonstrate linguistic knowledge captured by the representations learned within deep NLP models, very little attention has been paid towards individual neurons. We carry out a neuron-level analysis using core linguistic tasks of predicting morphology, syntax and semantics, on pre-trained language models, with questions like: i) do individual neurons in pretrained models capture linguistic information? ii) which parts of the network learn more about certain linguistic phenomena? iii) how distributed or focused is the information? and iv) how do various architectures differ in learning these properties? We found small subsets of neurons to predict linguistic tasks, with lower level tasks (such as morphology) localized in fewer neurons, compared to higher level task of predicting syntax. Our study reveals interesting cross architectural comparisons. For example, we found neurons in XLNet to be more localized and disjoint when predicting properties compared to BERT and others, where they are more distributed and coupled.

@inproceedings{durrani-etal-2020-analyzing,

title = "Analyzing Individual Neurons in Pre-trained Language Models",

author = "Durrani, Nadir and

Sajjad, Hassan and

Dalvi, Fahim and

Belinkov, Yonatan",

booktitle = "Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP)",

month = nov,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.emnlp-main.395",

doi = "10.18653/v1/2020.emnlp-main.395",

pages = "4865--4880",

abstract = "While a lot of analysis has been carried to demonstrate linguistic knowledge captured by the representations learned within deep NLP models, very little attention has been paid towards individual neurons. We carry out a neuron-level analysis using core linguistic tasks of predicting morphology, syntax and semantics, on pre-trained language models, with questions like: i) do individual neurons in pre-trained models capture linguistic information? ii) which parts of the network learn more about certain linguistic phenomena? iii) how distributed or focused is the information? and iv) how do various architectures differ in learning these properties? We found small subsets of neurons to predict linguistic tasks, with lower level tasks (such as morphology) localized in fewer neurons, compared to higher level task of predicting syntax. Our study also reveals interesting cross architectural comparisons. For example, we found neurons in XLNet to be more localized and disjoint when predicting properties compared to BERT and others, where they are more distributed and coupled.",

}

Analyzing Redundancy in Pretrained Transformer Models

Transformer-based deep NLP models are trained using hundreds of millions of parameters, limiting their applicability in computationally constrained environments. In this paper, we study the cause of these limitations by defining a notion of Redundancy, which we categorize into two classes: General Redundancy and Task-specific Redundancy. We dissect two popular pretrained models, BERT and XLNet, studying how much redundancy they exhibit at a representation-level and at a more fine-grained neuron-level. Our analysis reveals interesting insights, such as: i) 85% of the neurons across the network are redundant and ii) at least 92% of them can be removed when optimizing towards a downstream task. Based on our analysis, we present an efficient feature-based transfer learning procedure, which maintains 97% performance while using at-most 10% of the original neurons.

@inproceedings{dalvi-etal-2020-analyzing,

title = "Analyzing Redundancy in Pretrained Transformer Models",

author = "Dalvi, Fahim and

Sajjad, Hassan and

Durrani, Nadir and

Belinkov, Yonatan",

booktitle = "Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP)",

month = nov,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.emnlp-main.398",

doi = "10.18653/v1/2020.emnlp-main.398",

pages = "4908--4926",

abstract = "Transformer-based deep NLP models are trained using hundreds of millions of parameters, limiting their applicability in computationally constrained environments. In this paper, we study the cause of these limitations by defining a notion of Redundancy, which we categorize into two classes: General Redundancy and Task-specific Redundancy. We dissect two popular pretrained models, BERT and XLNet, studying how much redundancy they exhibit at a representation-level and at a more fine-grained neuron-level. Our analysis reveals interesting insights, such as i) 85{\%} of the neurons across the network are redundant and ii) at least 92{\%} of them can be removed when optimizing towards a downstream task. Based on our analysis, we present an efficient feature-based transfer learning procedure, which maintains 97{\%} performance while using at-most 10{\%} of the original neurons.",

}

Similarity Analysis of Contextual Word Representation Models

This paper investigates contextual word representation models from the lens of similarity analysis. Given a collection of trained models, we measure the similarity of their internal representations and attention. Critically, these models come from vastly different architectures. We use existing and novel similarity measures that aim to gauge the level of localization of information in the deep models, and facilitate the investigation of which design factors affect model similarity, without requiring any external linguistic annotation. The analysis reveals that models within the same family are more similar to one another, as may be expected. Surprisingly, different architectures have rather similar representations, but different individual neurons. We also observed differences in information localization in lower and higher layers and found that higher layers are more affected by fine-tuning on downstream tasks.

@inproceedings{wu-etal-2020-similarity,

title = "Similarity Analysis of Contextual Word Representation Models",

author = "Wu, John and

Belinkov, Yonatan and

Sajjad, Hassan and

Durrani, Nadir and

Dalvi, Fahim and

Glass, James",

booktitle = "Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics",

month = jul,

year = "2020",

address = "Online",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/2020.acl-main.422",

doi = "10.18653/v1/2020.acl-main.422",

pages = "4638--4655",

abstract = "This paper investigates contextual word representation models from the lens of similarity analysis. Given a collection of trained models, we measure the similarity of their internal representations and attention. Critically, these models come from vastly different architectures. We use existing and novel similarity measures that aim to gauge the level of localization of information in the deep models, and facilitate the investigation of which design factors affect model similarity, without requiring any external linguistic annotation. The analysis reveals that models within the same family are more similar to one another, as may be expected. Surprisingly, different architectures have rather similar representations, but different individual neurons. We also observed differences in information localization in lower and higher layers and found that higher layers are more affected by fine-tuning on downstream tasks.",

}

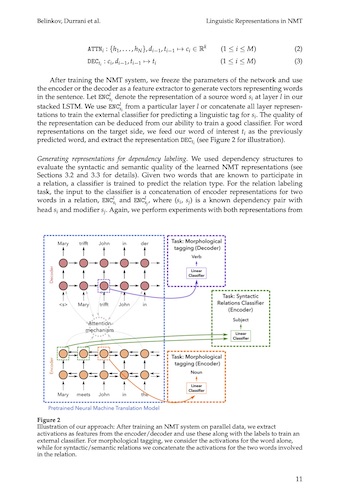

On the Linguistic Representational Power of Neural Machine Translation Models

Despite the recent success of deep neural networks in natural language processing and other spheres of artificial intelligence, their interpretability remains a challenge. We analyze the representations learned by neural machine translation (NMT) models at various levels of granularity and evaluate their quality through relevant extrinsic properties. In particular, we seek answers to the following questions: (i) How accurately is word structure captured within the learned representations, which is an important aspect in translating morphologically rich languages? (ii) Do the representations capture long-range dependencies, and effectively handle syntactically divergent languages? (iii) Do the representations capture lexical semantics? We conduct a thorough investigation along several parameters: (i) Which layers in the architecture capture each of these linguistic phenomena; (ii) How does the choice of translation unit (word, character, or subword unit) impact the linguistic properties captured by the underlying representations? (iii) Do the encoder and decoder learn differently and independently? (iv) Do the representations learned by multilingual NMT models capture the same amount of linguistic information as their bilingual counterparts? Our data-driven, quantitative evaluation illuminates important aspects in NMT models and their ability to capture various linguistic phenomena. We show that deep NMT models trained in an end-to-end fashion, without being provided any direct supervision during the training process, learn a non-trivial amount of linguistic information. 
Notable findings include the following observations: (i) Word morphology and part-of-speech information are captured at the lower layers of the model; (ii) In contrast, lexical semantics or non-local syntactic and semantic dependencies are better represented at the higher layers of the model; (iii) Representations learned using characters are more informed about word-morphology compared to those learned using subword units; and (iv) Representations learned by multilingual models are richer compared to bilingual models.

@article{belinkov-etal-2020-linguistic,

title = "On the Linguistic Representational Power of Neural Machine Translation Models",

author = "Belinkov, Yonatan and

Durrani, Nadir and

Dalvi, Fahim and

Sajjad, Hassan and

Glass, James",

journal = "Computational Linguistics",

volume = "46",

number = "1",

month = mar,

year = "2020",

url = "https://www.aclweb.org/anthology/2020.cl-1.1",

doi = "10.1162/coli_a_00367",

pages = "1--52"

}

2019

Rumour verification through recurring information and an inner-attention mechanism

Verification of online rumours is becoming an increasingly important task with the prevalence of event discussions on social media platforms. This paper proposes an inner-attention-based neural network model that uses frequent, recurring terms from past rumours to classify a newly emerging rumour as true, false or unverified. Unlike other methods proposed in related work, our model uses the source rumour alone without any additional information, such as user replies to the rumour or additional feature engineering. Our method outperforms the current state-of-the-art methods on benchmark datasets (RumourEval2017) by 3% accuracy and 6% F-1 leading to 60.7% accuracy and 61.6% F-1. We also compare our attention-based method to two similar models which however do not make use of recurrent terms. The attention-based method guided by frequent recurring terms outperforms this baseline on the same dataset, indicating that the recurring terms injected by the attention mechanism have high positive impact on distinguishing between true and false rumours. Furthermore, we perform out-of-domain evaluations and show that our model is indeed highly competitive compared to the baselines on a newly released RumourEval2019 dataset and also achieves the best performance on classifying fake and legitimate news headlines.

@article{AKER2019100045,

title = "Rumour verification through recurring information and an inner-attention mechanism",

journal = "Online Social Networks and Media",

volume = "13",

pages = "100045",

year = "2019",

issn = "2468-6964",

doi = "10.1016/j.osnem.2019.07.001",

url = "http://www.sciencedirect.com/science/article/pii/S2468696419300588",

author = "Ahmet Aker and Alfred Sliwa and Fahim Dalvi and Kalina Bontcheva",

keywords = "Rumour Verification, Inner Attention Model, Recurring Terms in Rumours"

}

One Size Does Not Fit All: Comparing NMT Representations of Different Granularities

Recent work has shown that contextualized word representations derived from neural machine translation are a viable alternative to such from simple word predictions tasks. This is because the internal understanding that needs to be built in order to be able to translate from one language to another is much more comprehensive. Unfortunately, computational and memory limitations as of present prevent NMT models from using large word vocabularies, and thus alternatives such as subword units (BPE and morphological segmentations) and characters have been used. Here we study the impact of using different kinds of units on the quality of the resulting representations when used to model morphology, syntax, and semantics. We found that while representations derived from subwords are slightly better for modeling syntax, character-based representations are superior for modeling morphology and are also more robust to noisy input.

@inproceedings{durrani-etal-2019-one,

title = "One Size Does Not Fit All: Comparing {NMT} Representations of Different Granularities",

author = "Durrani, Nadir and

Dalvi, Fahim and

Sajjad, Hassan and

Belinkov, Yonatan and

Nakov, Preslav",

booktitle = "Proceedings of the 2019 Conference of the North {A}merican Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers)",

month = jun,

year = "2019",

address = "Minneapolis, Minnesota",

publisher = "Association for Computational Linguistics",

url = "https://www.aclweb.org/anthology/N19-1154",

doi = "10.18653/v1/N19-1154",

pages = "1504--1516",

abstract = "Recent work has shown that contextualized word representations derived from neural machine translation are a viable alternative to such from simple word predictions tasks. This is because the internal understanding that needs to be built in order to be able to translate from one language to another is much more comprehensive. Unfortunately, computational and memory limitations as of present prevent NMT models from using large word vocabularies, and thus alternatives such as subword units (BPE and morphological segmentations) and characters have been used. Here we study the impact of using different kinds of units on the quality of the resulting representations when used to model morphology, syntax, and semantics. We found that while representations derived from subwords are slightly better for modeling syntax, character-based representations are superior for modeling morphology and are also more robust to noisy input.",

}

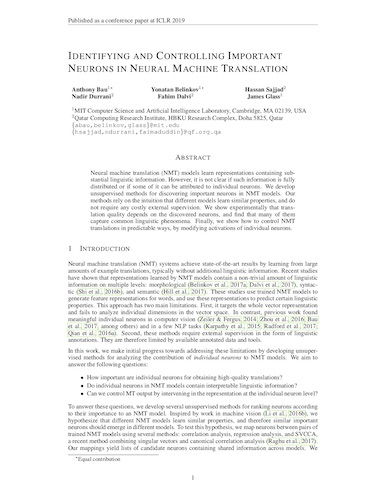

Identifying and Controlling Important Neurons in Neural Machine Translation

Neural machine translation (NMT) models learn representations containing substantial linguistic information. However, it is not clear if such information is fully distributed or if some of it can be attributed to individual neurons. We develop unsupervised methods for discovering important neurons in NMT models. Our methods rely on the intuition that different models learn similar properties, and do not require any costly external supervision. We show experimentally that translation quality depends on the discovered neurons, and find that many of them capture common linguistic phenomena. Finally, we show how to control NMT translations in predictable ways, by modifying activations of individual neurons.

@inproceedings{bau2018identifying,

title={Identifying and Controlling Important Neurons in Neural Machine Translation},

author={Anthony Bau

and Yonatan Belinkov

and Hassan Sajjad

and Nadir Durrani

and Fahim Dalvi

and James Glass},

booktitle={International Conference on Learning Representations},

year={2019},

url={https://openreview.net/forum?id=H1z-PsR5KX},

}

What Is One Grain of Sand in the Desert? Analyzing Individual Neurons in Deep NLP Models

Despite the remarkable evolution of deep neural networks in natural language processing (NLP), their interpretability remains a challenge. Previous work largely focused on what these models learn at the representation level. We break this analysis down further and study individual dimensions (neurons) in the vector representation learned by end-to-end neural models in NLP tasks. We propose two methods: Linguistic Correlation Analysis, based on a supervised method to extract the most relevant neurons with respect to an extrinsic task, and Cross-model Correlation Analysis, an unsupervised method to extract salient neurons w.r.t. the model itself. We evaluate the effectiveness of our techniques by ablating the identified neurons and reevaluating the network’s performance for two tasks: neural machine translation (NMT) and neural language modeling (NLM). We further present a comprehensive analysis of neurons with the aim to address the following questions: i) how localized or distributed are different linguistic properties in the models? ii) are certain neurons exclusive to some properties and not others? iii) is the information more or less distributed in NMT vs. NLM? and iv) how important are the neurons identified through the linguistic correlation method to the overall task? Our code is publicly available as part of the NeuroX toolkit (Dalvi et al. 2019a). This paper is a non-archived version of the paper published at AAAI (Dalvi et al. 2019b).

@article{dalvi2019individualneurons,

title={What Is One Grain of Sand in the Desert? Analyzing Individual Neurons in Deep NLP Models},

author={Dalvi, Fahim and Durrani, Nadir and Sajjad, Hassan and Belinkov, Yonatan and Bau, Anthony and Glass, James},

volume={33},

url={https://ojs.aaai.org/index.php/AAAI/article/view/4592},

DOI={10.1609/aaai.v33i01.33016309},

number={01},

journal={Proceedings of the AAAI Conference on Artificial Intelligence},

year={2019},

month={Jul.},

pages={6309-6317},